The Music Interaction and Computational Arts (MICA) Lab is an inclusive research group that develops and evaluates novel technologies for creative expression. The lab is located in The Bridge, a cutting-edge creative technology facility funded by the Arts and Humanities Research Council (AHRC), the West of England Combined Authority (WECA) and UWE, Bristol. In 2025 we will be recruiting a research fellow in Digital Music Interaction and a fully funded PhD student. We are always on the lookout for new projects, collaborations and opportunities. Please contact tom.mitchell@uwe.ac.uk.

AI and Wearables October 2025 studentship

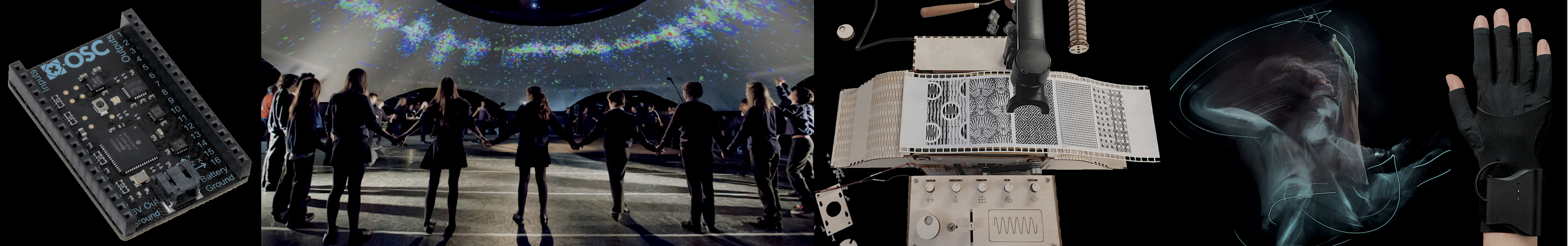

The Outside Interactions project is supported by a UKRI Future Leaders Fellowship and will explore a novel approach to human-computer interaction, along with new methods for co-designing digital musical instruments (DMIs) with non-technical and physically impaired musicians. The project takes a disruptive and inclusive approach that will reshape the fundamental practice of DMI design, inviting broader participation in the development of new musical instruments and future visions of musicianship.

Motion capture and gestural interaction technologies have shifted the locus of interaction away from physical objects and onto the body, making human movement the interface for performing music. However, the development of musical objects and gestural music interaction are often pursued separately. Because it focuses on musical objects, instrument development is often framed as a technical challenge that assumes normative players and overlooks the diversity of musicians, audiences and their environments. Gestural music interaction has shown great potential for music performance and accessibility; however, it neglects the significant role of tactile feedback in music training and in the development of virtuosic performance.

The Outside Interactions project will take a holistic approach that couples gestural interaction with physical prototyping. Departing from the conventional practice of embedding sensing technologies within an instrument, it relocates the technology onto the body, sensing player interactions with wearable devices on the wrist and hand. This radical switch in both technical and design approach opens innovative new research directions for low-cost, rapidly produced musical instruments that can take any shape, scale or structure. Crucially, it gives people new ways to participate in the rapid co-design of novel, customised musical instruments tailored to their unique artistic identity and access requirements.

MICA Lab, UWE, Bristol

Frenchay Campus, Coldharbour Lane

Stoke Gifford BS16 1QY

Bristol, UK

tom.mitchell@uwe.ac.uk